Overview of the AWS SAA-C03 Exam

The AWS Certified Solutions Architect - Associate (SAA-C03) exam is designed to validate your ability to design and deploy scalable, highly available, and fault-tolerant systems on AWS. It covers a broad range of AWS services, including compute, storage, databases, networking, and security. Among these, Amazon S3 is a key service that you must master to excel in the exam.

The SAA-C03 exam tests your understanding of various AWS services and how they interact with each other. It also evaluates your ability to apply best practices for designing and managing cloud-based solutions. Given the importance of data storage in any cloud architecture, Amazon S3 is a critical topic that you need to be well-versed in.

Definition of AWS SAA-C03 Exam

The AWS SAA-C03 exam is an associate-level certification that validates your expertise in designing distributed systems on AWS. It is intended for individuals who have at least one year of hands-on experience designing available, cost-efficient, fault-tolerant, and scalable distributed systems on AWS.

The exam consists of multiple-choice and multiple-response questions that assess your knowledge of AWS services, architectural best practices, and your ability to design solutions that meet specific requirements. Amazon S3 is one of the core services covered in the exam, and understanding its features, limitations, and best practices is essential for success.

Understanding Amazon S3 Buckets

Amazon S3 is an object storage service that offers industry-leading scalability, data availability, security, and performance. It is designed to store and retrieve any amount of data from anywhere on the web. Amazon S3 is widely used for a variety of use cases, including data backup, data archiving, big data analytics, and content distribution.

At the heart of Amazon S3 is the concept of an S3 bucket. A bucket is a container for objects stored in Amazon S3. Each object consists of its data, a key (its name within the bucket), and metadata, and each bucket can hold an unlimited number of objects. Bucket names share a global namespace, meaning that no two buckets can have the same name, even when they belong to different AWS accounts or Regions.

Key Features of Amazon S3 Buckets

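- Durability and Availability: The S3 Standard storage class is designed for 99.999999999% (11 nines) durability and 99.99% availability over a given year. These are design targets rather than guarantees, and other storage classes have different availability targets, but they mean that your data is highly protected against loss and is readily accessible when needed.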

- Scalability: Amazon S3 can scale to store an unlimited amount of data. You can store as much data as you need, and Amazon S3 will automatically scale to accommodate your storage requirements.

- Security: Amazon S3 provides several security features, including encryption, access control policies, and bucket policies, to ensure that your data is secure.

- Versioning: Amazon S3 supports versioning, which allows you to keep multiple versions of an object in the same bucket. This is useful for data recovery and protecting against accidental deletions.

- Lifecycle Management: Amazon S3 allows you to define lifecycle rules to automatically transition objects to different storage classes or delete them after a specified period.
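To make the durability and availability percentages above more concrete, the following minimal sketch turns them into yearly expectations. The object count is a hypothetical workload chosen for illustration, and the function names are made up for this example; the arithmetic treats the quoted percentages as simple annual rates, which is a simplification.

```python
# Back-of-the-envelope figures implied by the design targets quoted above.
# The workload size below is a hypothetical value used only for illustration.

ANNUAL_OBJECT_LOSS_RATE = 1e-11   # 1 - 0.99999999999 (11 nines of durability)
AVAILABILITY = 0.9999             # 99.99% availability design target
MINUTES_PER_YEAR = 365 * 24 * 60


def expected_objects_lost_per_year(object_count: int) -> float:
    """Average number of objects expected to be lost in one year."""
    return object_count * ANNUAL_OBJECT_LOSS_RATE


def expected_unavailable_minutes_per_year(availability: float = AVAILABILITY) -> float:
    """Minutes per year a service may be unavailable at the given availability."""
    return (1 - availability) * MINUTES_PER_YEAR


if __name__ == "__main__":
    stored = 10_000_000  # hypothetical: ten million objects
    lost = expected_objects_lost_per_year(stored)
    print(f"Expected loss: {lost:.4f} objects/year (one object every {1 / lost:,.0f} years)")
    print(f"Unavailability budget: {expected_unavailable_minutes_per_year():.1f} minutes/year")
```

Under these assumptions, ten million objects work out to an expected loss of one object roughly every 10,000 years, and 99.99% availability leaves a budget of about 53 minutes of unavailability per year.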

Maximum Size of an S3 Bucket

One of the most common questions about Amazon S3 is, "What is the maximum size of an S3 bucket?" The answer is that there is no maximum size for an S3 bucket. Amazon S3 is designed to store an unlimited amount of data, and you can store as many objects as you need in a single bucket.

However, there are some limitations to be aware of:

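- Object Size: The maximum size of a single object in Amazon S3 is 5 terabytes (TB). A single PUT request can upload at most 5 GB, so larger objects must be uploaded with multipart upload, which sends one object as a set of parts and is recommended by AWS for objects over roughly 100 MB. Multipart upload does not raise the per-object limit; to store more data than fits in one object, split it across multiple objects.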

- Number of Objects: While there is no limit to the number of objects you can store in a bucket, there are some practical considerations. For example, each list request returns at most 1,000 keys, so listing all the objects in a very large bucket requires pagination and can be time-consuming.

- Request Rate: Amazon S3 supports at least 3,500 PUT/COPY/POST/DELETE requests and 5,500 GET/HEAD requests per second per prefix. If you need a higher aggregate rate, you can use techniques like prefix partitioning to distribute the load across multiple prefixes.
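The object size limit interacts with the multipart upload limits, and a small calculation shows how. The sketch below picks a part size for a given object, assuming the documented multipart constraints (at most 10,000 parts, each between 5 MiB and 5 GiB except the last). The function name and the 64 MiB default are illustrative choices, not part of any AWS SDK, and the object size cap is simply the figure quoted in this article.

```python
MIB = 1024 ** 2
GIB = 1024 ** 3
TIB = 1024 ** 4

MIN_PART_BYTES = 5 * MIB      # minimum size for every part except the last
MAX_PART_BYTES = 5 * GIB      # maximum size of a single part
MAX_PARTS = 10_000            # maximum number of parts in one upload
MAX_OBJECT_BYTES = 5 * TIB    # object size limit as quoted in this article


def _ceil_div(a: int, b: int) -> int:
    """Integer division rounded up, avoiding floating-point error."""
    return (a + b - 1) // b


def plan_multipart_upload(object_bytes: int, preferred_part_bytes: int = 64 * MIB) -> tuple[int, int]:
    """Return (part_size_bytes, part_count) that respects the limits above."""
    if not 0 < object_bytes <= MAX_OBJECT_BYTES:
        raise ValueError("object size must be between 1 byte and the object size limit")
    # Start from the preferred size, clamped to the allowed part-size range.
    part_size = min(max(preferred_part_bytes, MIN_PART_BYTES), MAX_PART_BYTES)
    # Grow the part size if the object would otherwise need more than MAX_PARTS parts.
    part_size = max(part_size, _ceil_div(object_bytes, MAX_PARTS))
    return part_size, _ceil_div(object_bytes, part_size)


if __name__ == "__main__":
    for size in (1 * GIB, 500 * GIB, 5 * TIB):
        part_size, parts = plan_multipart_upload(size)
        print(f"{size / GIB:>8.0f} GiB -> {parts} parts of {part_size / MIB:.1f} MiB")
```

The key design point is that the part size has to grow with the object: a fixed small part size would run past the 10,000-part cap long before the object size limit is reached.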

Related Exam Concepts (AWS SAA-C03)

Understanding Amazon S3 buckets is just one piece of the puzzle when it comes to the SAA-C03 exam. Here are some related concepts that you should be familiar with:

- Storage Classes: Amazon S3 offers several storage classes, including S3 Standard, S3 Intelligent-Tiering, S3 Standard-IA (Infrequent Access), S3 One Zone-IA, and the S3 Glacier classes (S3 Glacier Instant Retrieval, S3 Glacier Flexible Retrieval, and S3 Glacier Deep Archive). Each storage class is optimized for different access patterns, retrieval times, and costs, and understanding when to use each one is crucial for the exam.

- Data Transfer: The SAA-C03 exam may test your knowledge of data transfer costs and methods, including data transfer into and out of Amazon S3, as well as data transfer between AWS regions.

- Security and Compliance: You should be familiar with Amazon S3 security features, such as bucket policies, access control lists (ACLs), and encryption options (SSE-S3, SSE-KMS, SSE-C). Additionally, you should understand how to use Amazon S3 for compliance with various regulatory requirements.

- Data Management: The exam may also cover topics related to data management in Amazon S3, such as lifecycle policies, cross-region replication, and event notifications.
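Storage classes and lifecycle policies are easier to reason about with a concrete rule in hand. The sketch below writes one lifecycle rule as a Python dictionary in the shape the S3 lifecycle configuration API accepts (for example, as the `LifecycleConfiguration` argument when using boto3), plus a small helper that models which storage class an object would be in at a given age. The rule ID, prefix, and day counts are hypothetical, and the helper is a simplified model for study purposes, not how S3 evaluates rules internally.

```python
import json

# Hypothetical rule: the ID, prefix, and day counts are illustrative choices.
lifecycle_configuration = {
    "Rules": [
        {
            "ID": "archive-then-expire-logs",
            "Status": "Enabled",
            "Filter": {"Prefix": "logs/"},
            "Transitions": [
                {"Days": 30, "StorageClass": "STANDARD_IA"},
                {"Days": 90, "StorageClass": "GLACIER"},
            ],
            "Expiration": {"Days": 365},
        }
    ]
}


def storage_class_on_day(rule: dict, age_days: int) -> str:
    """Return the storage class (or 'EXPIRED') for an object of the given age.

    Simplified model: assumes the object was uploaded to STANDARD and that
    each action takes effect exactly on the configured day.
    """
    if age_days >= rule["Expiration"]["Days"]:
        return "EXPIRED"
    current = "STANDARD"
    for transition in sorted(rule["Transitions"], key=lambda t: t["Days"]):
        if age_days >= transition["Days"]:
            current = transition["StorageClass"]
    return current


if __name__ == "__main__":
    print(json.dumps(lifecycle_configuration, indent=2))
    rule = lifecycle_configuration["Rules"][0]
    for age in (0, 45, 120, 400):
        print(age, storage_class_on_day(rule, age))
```

Walking an object through the rule this way is a useful check for scenario questions that ask which class is cheapest for data that cools off over time.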

Best Practices for Managing Large S3 Buckets

Managing large S3 buckets can be challenging, but following best practices can help you optimize performance, reduce costs, and ensure data security. Here are some best practices to consider:

- Use Prefix Partitioning: If a bucket receives a very high request rate, spread the objects across multiple key prefixes. S3 scales request throughput per prefix, so distributing reads and writes over more prefixes raises the aggregate rate the bucket can sustain; the number of objects under a prefix does not itself limit performance.

- Implement Lifecycle Policies: Use lifecycle policies to automatically transition objects to different storage classes or delete them after a specified period. This can help you optimize storage costs and manage data retention.

- Enable Versioning: Enable versioning to protect against accidental deletions and overwrites. Versioning allows you to keep multiple versions of an object, which can be useful for data recovery.

- Use Multipart Upload: For large objects, use the multipart upload feature to upload the object in parts. This can improve upload performance and allow you to resume interrupted uploads.

- Monitor and Optimize Costs: Regularly monitor your S3 usage and costs using AWS Cost Explorer and AWS Budgets. Consider using S3 Intelligent-Tiering to automatically optimize storage costs based on access patterns.

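- Secure Your Data: Implement security best practices, such as enabling encryption, keeping S3 Block Public Access turned on, controlling access with bucket policies and IAM policies (AWS recommends keeping ACLs disabled for most use cases), and regularly auditing your S3 buckets for security vulnerabilities.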
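As an illustration of prefix partitioning, one common approach is to derive a short, stable prefix from a hash of the object key so that requests spread across several prefixes. The sketch below is one way to do that; the `shard-NN/` naming scheme, the function name, and the default of 16 shards are assumptions made for this example rather than anything S3 prescribes.

```python
import hashlib


def sharded_key(original_key: str, shard_count: int = 16) -> str:
    """Prepend a stable, hash-derived shard prefix to an object key."""
    if shard_count < 1:
        raise ValueError("shard_count must be at least 1")
    # The same key always hashes to the same shard, so reads can recompute it.
    digest = hashlib.sha256(original_key.encode("utf-8")).hexdigest()
    shard = int(digest, 16) % shard_count
    return f"shard-{shard:02d}/{original_key}"


if __name__ == "__main__":
    for key in ("logs/2024/01/app.log", "logs/2024/01/db.log", "images/cat.png"):
        print(sharded_key(key))
```

The trade-off is that hashed prefixes scatter related objects, so listing everything under a natural path such as `logs/2024/` then requires one listing per shard. For workloads well below the per-prefix request rates, natural prefixes are usually simpler.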

Common Misconceptions About S3 Bucket Size

There are several common misconceptions about Amazon S3 bucket size that can lead to confusion. Let's address some of these misconceptions:

1. Misconception: There is a maximum size limit for an S3 bucket.

- Reality: As mentioned earlier, there is no maximum size limit for an S3 bucket. You can store an unlimited amount of data in a single bucket.

2. Misconception: You can only store a limited number of objects in an S3 bucket.

- Reality: There is no limit to the number of objects you can store in an S3 bucket. However, listing a large number of objects can be time-consuming and may require pagination.

3. Misconception: Large S3 buckets are difficult to manage.

- Reality: Large buckets need planning, but S3 handles the scaling for you. Best practices such as prefix partitioning, lifecycle policies, and versioning keep even very large buckets manageable.

4. Misconception: Amazon S3 is only suitable for small-scale storage.

- Reality: Amazon S3 is designed to scale to meet the needs of businesses of all sizes, from small startups to large enterprises. It is capable of storing and managing petabytes of data.
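The pagination point in misconception 2 can be sketched without touching AWS at all. The example below simulates the continuation-token loop used when listing a bucket, assuming the limit of 1,000 keys per list request. `fake_list_objects` is a hypothetical in-memory stand-in written for this example, not an SDK call, and its integer token is a simplification of the opaque token a real listing returns.

```python
from typing import Iterator, List, Optional, Tuple

MAX_KEYS_PER_PAGE = 1000  # a single list request returns at most 1,000 keys


def fake_list_objects(keys: List[str], token: int = 0) -> Tuple[List[str], Optional[int]]:
    """Hypothetical stand-in for one list request: one page plus the next token."""
    page = keys[token:token + MAX_KEYS_PER_PAGE]
    next_token = token + len(page)
    # Return None as the token when there is nothing left to list.
    return page, (next_token if next_token < len(keys) else None)


def iterate_all_keys(keys: List[str]) -> Iterator[str]:
    """Follow continuation tokens until the listing is exhausted."""
    token: Optional[int] = 0
    while token is not None:
        page, token = fake_list_objects(keys, token)
        yield from page


def list_requests_needed(object_count: int) -> int:
    """Number of list requests required to enumerate object_count keys."""
    return max(1, (object_count + MAX_KEYS_PER_PAGE - 1) // MAX_KEYS_PER_PAGE)


if __name__ == "__main__":
    bucket = [f"object-{i:05d}" for i in range(2500)]
    print(len(list(iterate_all_keys(bucket))), "keys listed")
    print(list_requests_needed(1_000_000_000), "requests for one billion objects")
```

The arithmetic is the practical takeaway: enumerating a billion objects takes a million sequential list requests, which is why very large buckets are usually inventoried with tools such as S3 Inventory rather than listed on demand.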

Conclusion

Amazon S3 is a powerful and versatile storage service that plays a critical role in the AWS ecosystem. For those preparing for the AWS Certified Solutions Architect - Associate (SAA-C03) exam, understanding Amazon S3 buckets is essential. By mastering the concepts of S3 bucket size, best practices for managing large buckets, and related exam topics, you can confidently tackle the SAA-C03 exam and design robust, scalable, and secure cloud-based solutions.

Remember, the key to success in the SAA-C03 exam is not just memorizing facts but understanding how to apply AWS services to real-world scenarios. With a solid grasp of Amazon S3 and its features, you'll be well on your way to becoming an AWS Certified Solutions Architect. Good luck!

Special Discount: Offer Valid For Limited Time “SAA-C03 Exam” Order Now!

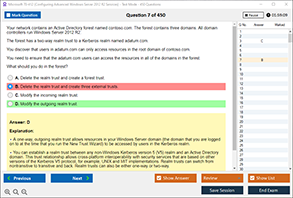

Sample Question for the AWS SAA-C03 Exam

Actual exam question from AWS SAA-C03 Exam.

What is the maximum size of an Amazon S3 bucket?

A) 1 TB

B) 100 TB

C) 5 PB

D) Unlimited